While unpopular presidents see their party lose more seats at midterm elections, the relationship is so noisy that predictions from it are nearly worthless. What is more, the simple prediction of seat loss from approval leads to forecasts for 2006 that are wildly out of line with most informed opinion. This doesn't mean we shouldn't look at the data or the election forecasting models, but it does require that we mix our enthusiasm for prediction with some sober realism about the uncertainty in such forecasts.

President Bush's recent decline in the polls has led many to conclude that Republicans in Congress are doomed next November. I've pointed out here that the president's approval ratings are (almost) unprecedented for a midterm election, and that we simply don't know what a president with an approval rating in the high 20s or low 30s would do to his party's congressional fortunes. I stand by that, but at the same time we need to look at the historical record of approval, seat change, and the noise in that relationship.

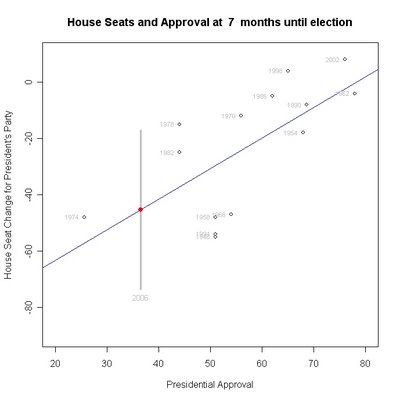

The figure above plots the change in House seats for the president's party in each midterm election since 1946. (I left 1946 out in the earlier post, but reader comments convinced me to include it.) In all but two of these years the president's party lost seats. That is one of the most reliable regularities in American politics, or at least it was until 1998. Before 1998, 1934 was the last time a president's party gained seats in a midterm, and before 1934 the only such gain since 1860 came in 1902. So two consecutive midterm gains (1998 and 2002) raise the possibility that House elections have changed in some fundamental way in the last eight years. Or perhaps they are simply two exceptional years rather than harbingers of real change.

Still, there is a reliable relationship between approval and seat change, at least when considering all midterms since 1946: each percentage point of approval gained or lost by a president predicts about one more seat held or lost by his party in the House. By that count, President Bush's decline in approval from 42% to 33% since January should cost his party 9 seats. That's the bad news for Republicans.
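
The slope-times-change arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not the model behind the figure: the five (approval, seat change) pairs are hypothetical, chosen to lie exactly on a line with the post's slope of about one seat per point, and the function names are made up for the example.

```python
def ols_fit(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def predicted_shift(slope, approval_before, approval_after):
    """Change in predicted seat change implied by a change in approval."""
    return slope * (approval_after - approval_before)

# HYPOTHETICAL (approval %, seat change) pairs, not the historical record;
# they sit exactly on a line with a slope of 1 seat per approval point.
approval = [40, 45, 50, 55, 60]
seats = [-50, -45, -40, -35, -30]

a, b = ols_fit(approval, seats)
# A drop from 42% to 33% is 9 points, so about 9 more seats lost.
print(predicted_shift(b, 42, 33))
```

With a slope of one seat per point, the 9-point slide translates directly into a 9-seat shift; with real, noisy data the estimated slope would only be approximately 1.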

But there are two problems with such estimates. The first is that House districts have become far less competitive than in the past, and most analysts believe the redistricting for 2002 produced some of the most partisan, and hence "safe", seats in history. If so, the past may be a poor predictor of the future. Based on history, a president at 36.5% approval in April (where President Bush was) should expect to lose 45 seats in the House. That is wildly out of line with the best-informed opinion, which holds that the Democrats will be very lucky to gain the 15 seats needed to take control of the House; a loss of 45 seats is far beyond anyone's current expectations. So one issue is whether the past is much of a guide to the current House. Those who know it best think the current House is too well insulated from electoral tides for such predictions to prove valid.

The second problem is the size of the variation in seat change at a given approval level. In 1994, a president with an April approval of 51% saw his party lose 54 seats in November. Less popular presidents Carter and Reagan (yes, really) both had April approvals of 44%, in 1978 and 1982 respectively, but lost only 15 and 25 seats. The scatter around the blue regression line in the figure makes clear that at any given approval level, your actual results may vary. A lot.

For President Bush, with an April approval of 36.5% and a predicted seat loss of 45, this uncertainty translates into a range of predicted losses from the ridiculous (-74) to the plausible (-17). That range is so wide as to be politically useless for estimating practical consequences. If you read forecasting models, look to see whether they provide a confidence interval for their predictions. Most report their point prediction (xx seats), and a few report their R-squared or the fit within the sample. But very few advertise the confidence interval for the out-of-sample forecast. For good reason: it is almost always huge.
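
The difference between the fit of the line and the uncertainty of a forecast can be made concrete. Statisticians usually call the forecast range a prediction interval: it adds one election's own scatter to the uncertainty in the line itself, and both pieces grow as the approval level moves away from the data. The sketch below is a minimal stdlib-only illustration under stated assumptions: the data are hypothetical, the function name is made up, and the caller supplies the t critical value from a t table (for example, about 2.18 for a two-sided 95% interval with 12 degrees of freedom, i.e. 14 elections minus two estimated coefficients).

```python
import math

def forecast_intervals(x, y, x0, t_crit):
    """Simple OLS of y on x; return (point, ci, pi) at a new value x0.

    ci: interval for the *average* seat change at x0 (uncertainty in the line).
    pi: interval for *one new election* at x0 (line uncertainty plus scatter).
    t_crit: two-sided t critical value with len(x) - 2 degrees of freedom,
            supplied by the caller from a t table.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    # Residual standard error: the typical miss of the line, in seats.
    ssr = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(ssr / (n - 2))
    point = intercept + slope * x0
    # This term grows as x0 moves away from the approval levels in the data.
    leverage = 1 / n + (x0 - x_bar) ** 2 / sxx
    se_mean = s * math.sqrt(leverage)
    se_new = s * math.sqrt(1 + leverage)  # the extra 1 is one year's own scatter
    ci = (point - t_crit * se_mean, point + t_crit * se_mean)
    pi = (point - t_crit * se_new, point + t_crit * se_new)
    return point, ci, pi

# HYPOTHETICAL (approval %, seat change) pairs, not the historical record.
approval = [40, 45, 50, 55, 60]
seats = [-50, -40, -45, -35, -30]
# 3 degrees of freedom here (5 points minus 2 coefficients); t is about 3.18.
point, ci, pi = forecast_intervals(approval, seats, 36.5, t_crit=3.182)
print(point, ci, pi)
```

Even with made-up data, the prediction interval is always the wider of the two, and both widen when the forecast is made at an approval level outside the observed range, as 36.5% is here.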

What if we cherry-pick our data? In the graph above, 1946, 1958, 1966 and 1994 don't seem to fit with the rest of the data. If I drop these, a line through the remaining points fits better and produces a slightly more plausible prediction of -30 seats (with a confidence interval of -45 to -16). But this grossly overstates my true confidence! How do I know that 2006 will not be like 1958 or 1966 or 1994? And why not also take out 1954, which now doesn't look so close to the line? Every decision like this actually ADDS uncertainty to my true confidence interval rather than taking it away.
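
Why trimming makes the stated interval look so much tighter can be seen in a small sketch. It assumes hypothetical data (five points on a line plus two planted "odd years"), and `drop_worst` is a made-up stand-in for eyeballing the graph and removing the years that don't fit. The residual standard error that drives any interval built from the fit collapses, even though nothing about the next election has become more predictable; the code has only discarded the evidence of how much elections scatter.

```python
import math

def fit_and_spread(x, y):
    """OLS of y on x; return (intercept, slope, residual standard error)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    ssr = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    return intercept, slope, math.sqrt(ssr / (n - 2))

def drop_worst(x, y, k):
    """Hypothetical cherry-picking rule: drop the k largest absolute residuals."""
    a, b, _ = fit_and_spread(x, y)
    by_miss = sorted(range(len(x)), key=lambda i: abs(y[i] - a - b * x[i]), reverse=True)
    keep = sorted(by_miss[k:])
    return [x[i] for i in keep], [y[i] for i in keep]

# HYPOTHETICAL data: five points on a line plus two planted "odd years".
approval = [40, 45, 50, 55, 60, 50, 50]
seats = [-50, -45, -40, -35, -30, -70, -10]

_, _, s_all = fit_and_spread(approval, seats)
x_kept, y_kept = drop_worst(approval, seats, k=2)
_, _, s_kept = fit_and_spread(x_kept, y_kept)
print(s_all, s_kept)  # the stated spread collapses once the odd years are gone
```

In this toy case the fitted line does not move at all; only the reported spread shrinks, which is exactly the false confidence the trimmed interval conveys.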

So what conclusion should we reach from presidential approval in the months leading up to a midterm election? We have a very good basis for concluding that lower approval means more seats lost. But the best estimates we can manage, given that we have only 14 elections with approval data, are so imprecise that their political implications are basically worthless. I'd bet a lot (OK, I don't bet, so that's a cheap line) that the Republican Party will lose seats in the House, and that the lower the President's approval rating, the worse they will do. But putting any meaningful confidence around a single point prediction (say, -15 seats or more) requires much more work and a good deal more calculation than just looking at the history of approval and seat change.

A number of political scientists and a few economists have developed models to forecast election results. These use more than just approval and achieve forecasts somewhat more precise than is possible with approval alone. I'll be writing about those models, and estimating some of my own, in the next few weeks. But keep your eye on the confidence interval. The uncertainty is larger than Democrats would like right now, and that's the good news for Republicans.

And to my (former?) friends who do forecasting: show me your confidence interval and I'll show you mine. I'll be happy to be proved wrong with legitimate tight intervals.
